UPDATE: Not all of what I wrote aged well so certain corrections are required. It was a right observation (described below) that in a zero-shot mode when a model creates code directly from an arbitrary prompt (i.e., it is not shown several text-to-code translation examples), GPT-3 (or similar models) that were not systematically trained on code performed rather poorly. However, a subsequent work by Open AI: Evaluating Large Language Models trained on Code" showed that if a model was pre-trained on lot of publicly available code then it acquired non-trivial coding capabilities often producing correct (or near correct) solutions purely from natural language. A more up-to-date perspective is in my more recent tweet.

Automation of software development is as old as the software development itself. Remember that we started writing programs directly in machine codes (and I personally actually have experience programming such a device). Then, a big productivity boost was achieved by writing assembly programs, which shortly followed by introduction of higher-level languages such as FORTRAN. The progress clearly has not stopped at that point and we see a great deal of improvement coming from better programming languages, libraries, and tooling. In the machine learning world, people started by writing their own CUDA kernels, but now we have dynamic tensor frameworks (such as Pytorch), where the neural computation graph is defined (and modified) by a Python program. These modifications can happen dynamically, i.e., during training or inference. There are a lot of smart ways in which the progress can continue in the future. But I think that a fully automated code generation from a natural language is not one of them.

What are the problems with automatic code generation? One big issue is that there is no reliable technology to understand and interpret the natural language. My strong belief is that sequence-to-sequence like neural networks or language-modeling approaches do not learn much beyond a probability distribution. These technologies are ground-breaking and there is nothing short of amazing that we can have a close-to-human-quality machine translation. However, these models do make stupid mistakes here and there. Humans do them too, but the output of a machine translation system is easy to post-edit and the scale of errors can be pretty small. What makes it possible is that the machine translation is (loosely speaking) a content-preserving process, which has a high degree of fidelity in terms of lexical, syntactic and semantic similarity between the source and the target. It also has a high degree of locality: A sentence-level translation, which does not take the document context into account, can sometimes be quite accurate. This is one of the reasons why the machine translation was possibly the first successful AI application, which was shown to work in a very narrow domain more than 50 years ago.

Many people think that post-editing of the GPT3 output would work as well as post editing of the machine translation output. However, software development is far from this idealistic view. Software is being created as a fusion of a developer's knowledge and common sense, user requirements, colleague advice and feedback. There is no well-defined source and no well-defined target. There is also a complicated feedback loop coming from debugging and testing. It is simply impossible to express these requirements through a reasonably small set of examples. Furthermore, writing out such examples is time consuming and error prone (there is no precise semantics in example-based programming). It is even more time consuming to fix the mistakes generators make.

I also strongly believe that the current AI systems can greatly help to improve the autocomplete functionality, but pushing the current tech to the level of a "pilot"-like automation is likely impossible. Unfortunately, much of the discussion on the topic is influenced by early testers whose positive feedback has been magnified 1000x while all the negative feedback has been largely muffled. Fortunately, the

EleutherAI and HuggingFace teams made a GPT-neo demo available to everyone and we can now poke it. We are interested in the SQL generation, which is the only code-generation option.

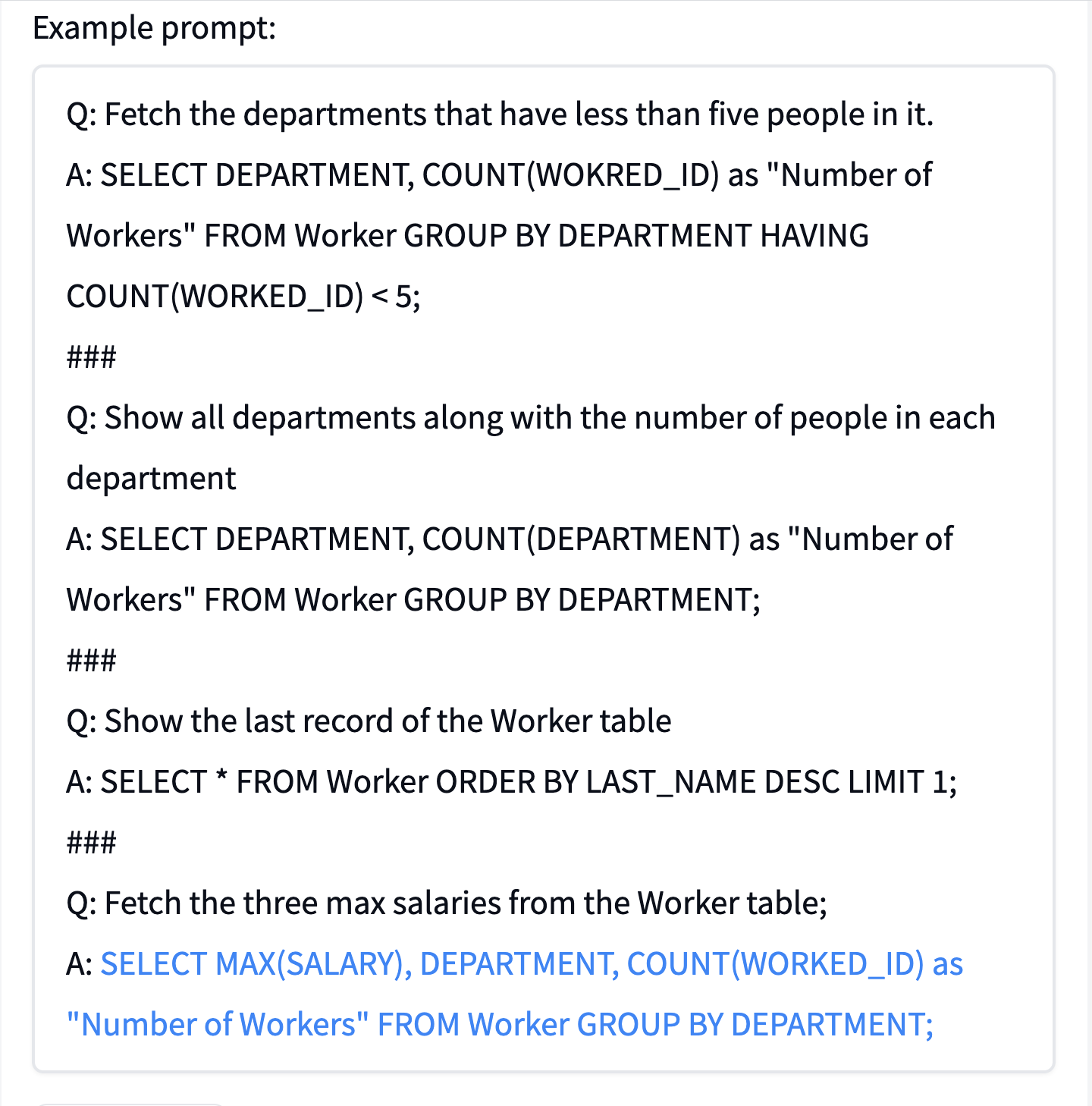

First, one can notice that the process is not deterministic: Each time you click "generate" the system may generate a unique answer. The original HuggingFace example asks to "Fetch the three max salaries from the Worker table". I clicked multiple times, but all of the answers seem to be incorrect. Sometimes, they are quite far from a correct one, check out, e.g., this example:

I also tried to replace the word "fetch" with the word "select" in the prompt, but the results were still disastrous. One may argue that EleutherAI/HuggingFace came up with the prompt that was too complicated. Thus, I tried a simpler version, which had more consistent prompts:

Q: Select worker IDs whose salaries are greater than one thousand.

A: SELECT WORKER_ID WHERE SALARY > 1000;

###

Q: Select worker IDs whose salaries are greater than 2345.

A: SELECT WORKER_ID WHERE SALARY > 2345;

###

Q: Fetch worker IDs whose salaries are greater than three thousands.

A: SELECT WORKER_ID WHERE SALARY > 3000;

###

Q: Fetch worker IDs whose salaries are between 1500 and 2500.

A: SELECT WORKER_ID WHERE SALARY BETWEEN 1500 AND 2500;

###

Q: Fetch worker IDs whose salaries are greater than 4321.

A:

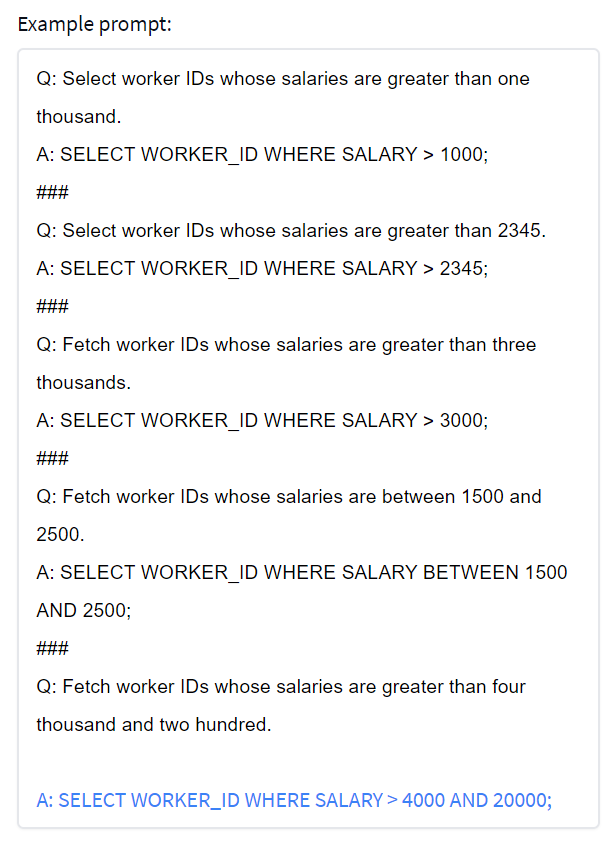

This prompt consistently generates a correct answer. However, the system still breaks easily, e.g., when I ask to retrieve salaries greater than four thousand and two hundred:

In conclusion, there is little doubt that language-to-code generation works in some toy cases, but in most other cases, it does not. I suspect that the technology is not there yet: It is completely unclear how the current predictive tech, which Metzler et al called dilettante, can be taken close to the level of accuracy of modern compilers, which is necessary, in fact, to be useful. Of course, the GPT-3 trained by Microsoft and OpenAI is better than the EleutherAI/HuggingFace GPT3, in particular, because it was trained in a semi-supervised fashion. Yet, its generation algorithm comes without any accuracy guarantees, which makes the model dangerous. In any case, I am looking forward to testing it, but Microsoft/OpenAI coding "co-pilot" is not open to the general public yet (there is a wait list).